Tunga – Autonomous Unmanned Ground Vehicle

Tunga is an autonomous unmanned ground vehicle (UGV) built for outdoor missions such as obstacle courses, waypoint navigation and vision-based tasks. It combines a ROS 2 software stack with modular perception, planning and control layers, enabling both remote teleoperation and fully autonomous driving. The platform targets student competitions, research projects and field robotics experiments.


Experience

Tunga is an autonomous unmanned ground vehicle (UGV) developed as a testbed for field robotics, competition tasks and research in autonomous navigation. The platform is designed to operate in semi-structured outdoor environments, handling uneven terrain, obstacles and mission-specific markers.

At the core of Tunga is a ROS 2–based software architecture running on Ubuntu. Perception modules use camera and sensor data together with OpenCV and deep-learning models to detect lanes, signs, obstacles and target markers. Localization and mapping rely on odometry and, where available, additional sensors to keep track of the vehicle’s pose along the course. On top of this, a planning layer generates safe paths and maneuvers, while a control layer drives the motors to follow trajectories smoothly and reliably.

Tunga supports multiple modes of operation: full autonomy, semi-autonomous assistance and manual teleoperation. In autonomous mode, the vehicle follows predefined waypoints, reacts to obstacles, respects course rules and completes tasks without direct human intervention. In teleoperation mode, an operator can control the platform over a wireless link using a user interface that visualizes live camera feeds, status information and mission data.

The mechanical and electrical design focuses on robustness and modularity: a rigid chassis, dedicated power distribution, motor drivers and sensor mounts make it easy to iterate on hardware. On the software side, components are organized into ROS 2 nodes for perception, planning, control, communication and UI, enabling parallel development and clean integration. Simulators such as Gazebo or Unreal Engine can be used to prototype algorithms before deploying them to the real vehicle.

Overall, Tunga serves as a practical, extensible platform for learning and experimenting with autonomous ground robots. It is suitable for competition teams, university labs and engineering students who want hands-on experience with real-world robotics, from low-level motor control up to high-level autonomy algorithms.
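Localization is described as relying on odometry. As a hedged illustration only, the sketch below shows the standard dead-reckoning update for a differential-drive base. The drive type, the wheel spacing and the names `Pose2D` and `integrate_odometry` are assumptions for this example, not taken from Tunga's code.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from +x


def integrate_odometry(pose: Pose2D, d_left: float, d_right: float,
                       wheel_base: float) -> Pose2D:
    """Update a pose from the distances travelled by each wheel.

    Hypothetical helper assuming a differential-drive base; wheel_base is
    the distance between the left and right wheels in metres.
    """
    d_center = (d_left + d_right) / 2.0       # forward motion of the axle centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading
    # Midpoint integration: project the motion along the average heading.
    mid_theta = pose.theta + d_theta / 2.0
    x = pose.x + d_center * math.cos(mid_theta)
    y = pose.y + d_center * math.sin(mid_theta)
    # Keep the heading wrapped to (-pi, pi].
    new_theta = pose.theta + d_theta
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(x, y, theta)
```

Called once per encoder update, this accumulates drift over time, which is why the description mentions fusing additional sensors where available.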

Features

Autonomous navigation

Tunga follows waypoints, avoids obstacles and completes course tasks using a layered navigation stack built on ROS 2.
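To make the waypoint-following part concrete, here is a minimal sketch using a proportional heading controller. It is an assumption for illustration, not Tunga's navigation stack: obstacle avoidance is omitted, and `follow_waypoints`, the tolerance and the gains are hypothetical values.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle to the range (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def follow_waypoints(x: float, y: float, theta: float,
                     waypoints: List[Waypoint], index: int,
                     tolerance: float = 0.5, max_speed: float = 1.0,
                     k_heading: float = 1.5, max_turn: float = 1.0
                     ) -> Tuple[int, float, float]:
    """Return (next waypoint index, linear m/s, angular rad/s).

    Hypothetical helper: advances past any waypoint within `tolerance`
    metres, then steers proportionally towards the next one.
    """
    # Skip waypoints that have already been reached.
    while index < len(waypoints):
        wx, wy = waypoints[index]
        if math.hypot(wx - x, wy - y) > tolerance:
            break
        index += 1
    if index >= len(waypoints):
        return index, 0.0, 0.0  # route complete: stop
    wx, wy = waypoints[index]
    heading_error = wrap_angle(math.atan2(wy - y, wx - x) - theta)
    angular = max(-max_turn, min(max_turn, k_heading * heading_error))
    # Slow down when badly misaligned so the vehicle turns before driving on.
    linear = max_speed * max(0.0, math.cos(heading_error))
    return index, linear, angular
```

In a layered stack, a local planner would typically adjust these commands when an obstacle blocks the direct line to the waypoint.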


Perception and computer vision

Camera and sensor data are processed with OpenCV and AI models to detect lanes, signs, obstacles and mission-specific markers.
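The page does not list Tunga's sensors, so the following assumes a 2D range sensor whose data is laid out like a ROS 2 `sensor_msgs/msg/LaserScan` message (`ranges`, `angle_min`, `angle_increment`, `range_min`, `range_max`). It is a stdlib-only sketch of a simple obstacle check: find the nearest valid return inside a forward sector. The function name and the 30° default are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def nearest_obstacle_ahead(ranges: Sequence[float], angle_min: float,
                           angle_increment: float, range_min: float,
                           range_max: float,
                           half_fov: float = math.radians(30)
                           ) -> Optional[Tuple[float, float]]:
    """Return (distance, bearing) of the closest valid return within
    +/- half_fov of straight ahead, or None if that sector is clear."""
    best: Optional[Tuple[float, float]] = None
    for i, r in enumerate(ranges):
        bearing = angle_min + i * angle_increment
        if abs(bearing) > half_fov:
            continue  # outside the forward sector
        # Ignore NaN/inf and readings outside the sensor's valid range.
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        if best is None or r < best[0]:
            best = (r, bearing)
    return best
```

A planner could use the returned distance and bearing to slow down or steer around the obstacle; camera-based detection of lanes, signs and markers would run alongside this.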


Modular control architecture

Separate ROS 2 nodes handle perception, planning, control and communication, making the system easier to extend and debug.
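The benefit of separate nodes comes from publish/subscribe decoupling: each stage only knows topic names, not the other stages. The sketch below imitates that pattern with a tiny in-process bus so it runs without ROS 2. It is not `rclpy`, delivery here is synchronous rather than executor-driven, and the topic names and thresholds are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class TopicBus:
    """In-process stand-in for ROS 2 topics: publishers and subscribers
    share only a topic name, never a direct reference to each other."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        for callback in list(self._subs[topic]):
            callback(msg)


def wire_pipeline(bus: TopicBus, log: List[str]) -> None:
    # "Perception": turns a minimum range reading into an obstacle flag.
    bus.subscribe("/scan_min", lambda r: bus.publish("/obstacle", r < 1.0))
    # "Planning": picks a target speed from the obstacle flag.
    bus.subscribe("/obstacle",
                  lambda blocked: bus.publish("/cmd_speed", 0.0 if blocked else 1.0))
    # "Control": would drive the motors; here it only records the command.
    bus.subscribe("/cmd_speed", lambda v: log.append(f"speed={v}"))
```

Because the stages meet only at topics, one stage (for example the planner) can be replaced or tested in isolation by publishing synthetic messages to its input topic.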


Teleoperation and UI

An operator dashboard provides live camera feeds, telemetry and manual control when needed, enabling safe testing and mixed autonomy.
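Mixing manual and autonomous control usually needs an arbitration rule for which command reaches the motors. A common pattern, sketched here as an assumption rather than Tunga's actual logic, is to let fresh teleoperation input override autonomy and to stop the vehicle if no source has published recently. `Command`, `CommandMux` and the 0.5 s timeout are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    linear: float   # m/s
    angular: float  # rad/s
    stamp: float    # seconds, on the same clock as `now`


class CommandMux:
    """Select the velocity command to send to the motors.

    Fresh teleoperation input overrides autonomy; if neither source has
    published within `timeout` seconds, the output is a stop command.
    """

    def __init__(self, timeout: float = 0.5) -> None:
        self.timeout = timeout
        self.teleop: Optional[Command] = None
        self.auto: Optional[Command] = None

    def _fresh(self, cmd: Optional[Command], now: float) -> bool:
        return cmd is not None and now - cmd.stamp <= self.timeout

    def select(self, now: Optional[float] = None) -> Command:
        now = time.monotonic() if now is None else now
        if self._fresh(self.teleop, now):
            return self.teleop
        if self._fresh(self.auto, now):
            return self.auto
        # Fail safe: a lost wireless link or stalled planner stops the vehicle.
        return Command(0.0, 0.0, now)
```

The timeout doubles as a simple dead-man check, which is one way to keep field testing safe when the wireless link drops.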


Competition-ready platform

Designed around the requirements of student competitions and field robotics projects, balancing robustness, flexibility and cost.


Media gallery

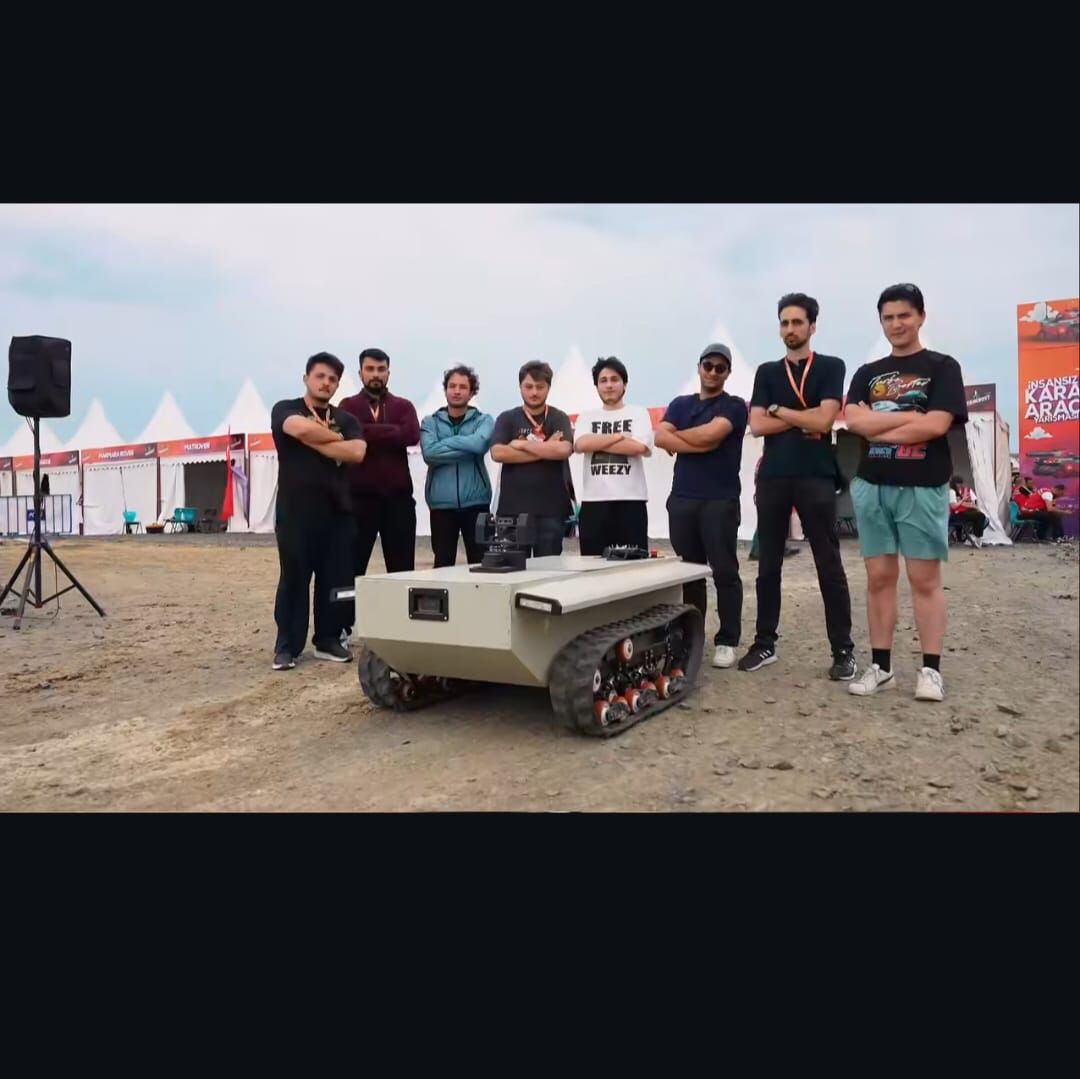
